Service that suits your needs

Our custom software development process revolves around an AI-centric approach, enhancing user experiences and delivering highly efficient solutions through advanced artificial intelligence technologies.

At Phyniks, we combine AI and creativity to drive innovation. Our tailored solutions yield extraordinary results. Explore our knowledge base for the latest insights, use cases, and case studies. Each resource is designed to fuel your imagination and empower your journey towards technological brilliance.

Your AI is dumber than you think, and how to fix it

Let’s be honest: your current AI assistant, whether it's an LLM-powered chatbot or a generative tool, is probably a bit of a disappointment.

You’ve seen the demos, read the articles, and you’re convinced this technology will transform your business. But then you try to get your AI to do something useful, and it falls flat.

You’re left with a tool that’s incredibly smart at one thing, creating text, but frustratingly inept at everything else.

This isn't a problem with the AI model itself; it's a problem with its lack of connection to the real world. Think of it like a brilliant strategist who can draw up a flawless battle plan but has no way to communicate with their troops or see the battlefield. Their genius is useless.

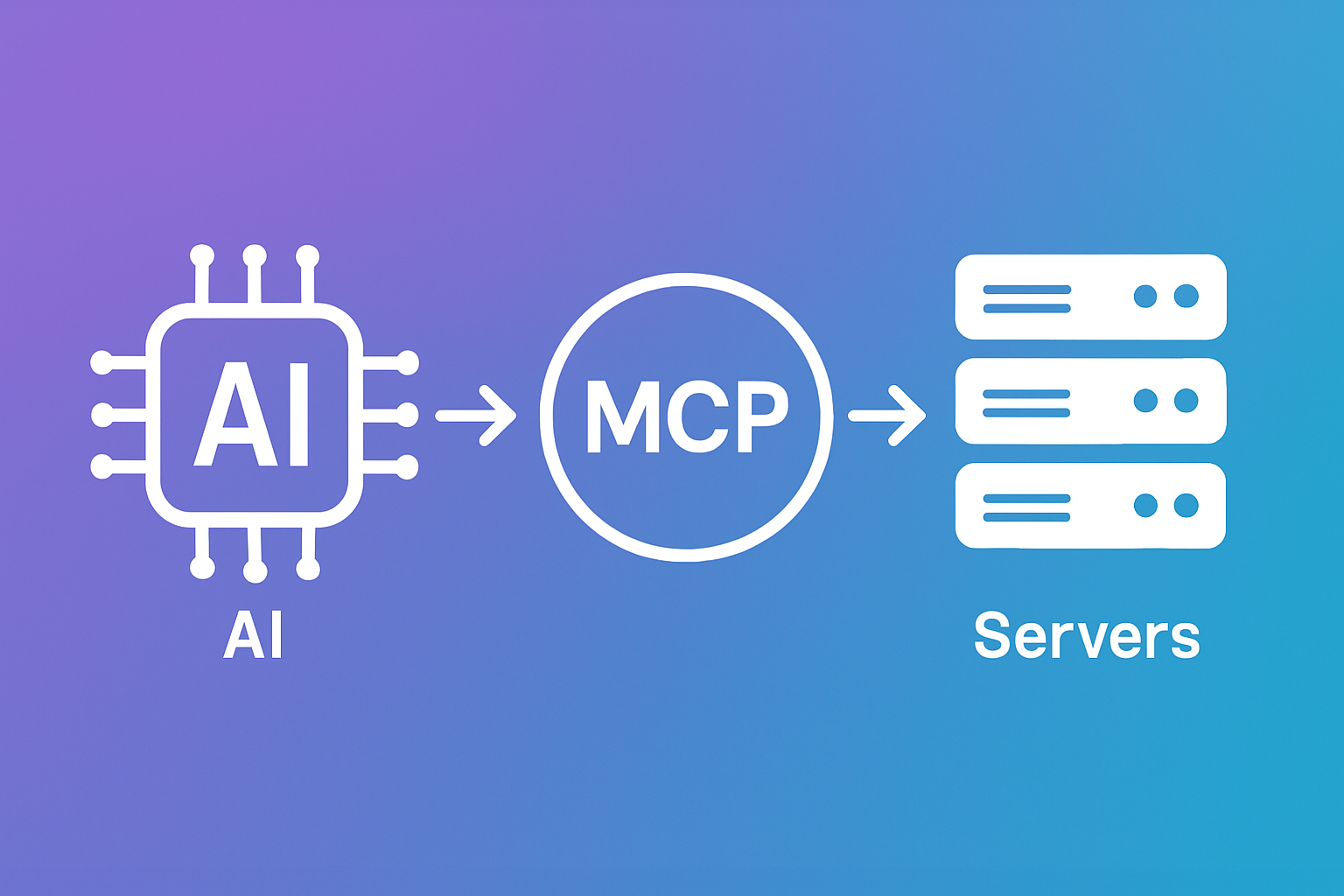

This is the current state of enterprise AI: a powerful brain with no hands and no eyes. This is where the Model Context Protocol (MCP) comes in, and it is arguably one of the most significant steps forward for business AI since the arrival of large language models.

Before we dive into what MCP is, let's talk about the way things used to work. When you wanted to connect an AI to your business systems, you had to use an API (Application Programming Interface).

An API is like a menu at a restaurant; it lists all the things you can order and how to order them. But if you wanted to connect your AI to a dozen different systems (your CRM, your ERP, your marketing automation platform, your inventory software), you had to build a dozen different integrations.

Each one was a custom job, a unique piece of code, and it was a headache to manage. This is a big reason why enterprise adoption of AI agents has lagged.

According to a recent study by Gartner, a staggering 78% of businesses report that integration challenges are a major roadblock to scaling their AI initiatives.

MCP changes this.

Imagine a universal charging port for every electronic device. That’s what MCP is for AI. It's an open standard, a common "language," that lets any MCP-compatible AI application communicate with external tools, databases, and services without a custom, one-off integration for each one. Introduced and open-sourced by Anthropic in November 2024, MCP defines a structured, standardized way for AI not just to retrieve information but also to take action.

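At its core, MCP is a client-server architecture designed specifically for AI. It has three main components that work together:

- Host: the AI application you interact with, such as a chat assistant or an AI-powered IDE. It runs the model and manages connections.
- Client: a connector inside the host that maintains a one-to-one connection with a single MCP server.
- Server: a lightweight program that exposes a specific system's data and capabilities (tools, resources, and prompts) through the protocol.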

The beauty of this architecture is that the host (your AI) doesn't need to know the specific details of every tool. It just needs to speak MCP. This allows for a truly plug-and-play ecosystem where you can add new capabilities to your AI simply by connecting it to a new MCP server.
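
To make the host/client/server split concrete, here is a minimal, self-contained Python sketch. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification. However, the `FakeServer` and `Host` classes and the `crm_lookup` and `inventory_check` tools are hypothetical. The sketch also simplifies heavily: real connections run over stdio or HTTP, begin with an `initialize` handshake, and list tools with full input schemas.

```python
import json
from itertools import count


class FakeServer:
    """Hypothetical in-process stand-in for an MCP server.

    A real server would receive these JSON-RPC messages over stdio or HTTP.
    """

    def __init__(self, tools):
        self.tools = tools  # maps tool name -> Python callable

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        if request["method"] == "tools/list":
            result = {"tools": [{"name": name} for name in self.tools]}
        elif request["method"] == "tools/call":
            params = request["params"]
            text = self.tools[params["name"]](**params["arguments"])
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        else:
            return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


class Host:
    """The AI application: one connection per server, one message format for all."""

    def __init__(self, servers):
        self._ids = count(1)
        self.routes = {}  # tool name -> server that provides it
        for server in servers:
            for tool in self._request(server, "tools/list", {})["tools"]:
                self.routes[tool["name"]] = server

    def _request(self, server, method, params):
        # Every server gets the same JSON-RPC 2.0 envelope; only the payload differs.
        message = {"jsonrpc": "2.0", "id": next(self._ids), "method": method, "params": params}
        return json.loads(server.handle(json.dumps(message)))["result"]

    def call_tool(self, name, **arguments):
        result = self._request(self.routes[name], "tools/call",
                               {"name": name, "arguments": arguments})
        return result["content"][0]["text"]


crm = FakeServer({"crm_lookup": lambda email: f"{email}: status=active"})
inventory = FakeServer({"inventory_check": lambda sku: f"{sku}: 42 in stock"})
host = Host([crm, inventory])
print(host.call_tool("inventory_check", sku="A-100"))  # A-100: 42 in stock
```

To add a capability, you pass one more server to `Host`. The host's own code does not change, which is the plug-and-play property described above.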

To truly understand the impact of the Model Context Protocol, it's helpful to see a side-by-side comparison of how business AI operated before this open standard and how it works now.

| Feature / Aspect | Before MCP (Traditional APIs) | With MCP (Model Context Protocol) |
|---|---|---|
| Integration Method | Custom, point-to-point integrations. Each AI model required a unique, hard-coded API integration for every external system (CRM, ERP, etc.). | Standardized, plug-and-play architecture. An AI model connects to any number of MCP servers using one universal protocol, eliminating the need for custom code for each new tool. |
| AI Capabilities | Primarily conversational and knowledge-based. AI could access information but was limited in its ability to take action. Automation was often manual or required complex orchestration. | Agentic and action-oriented. AI can not only retrieve information but also perform multi-step tasks across different systems, turning it into a true "doer." |
| Data Access | Static and prone to hallucination. The AI relied on its pre-trained data or one-off API calls, which could lead to outdated or inaccurate information. | Real-time and grounded. The AI connects to live, proprietary data sources, substantially reducing hallucinations and making outputs more factual and reliable. |
| Development & Scalability | Slow and costly. Adding a new capability or tool required a dedicated development effort, creating an "M × N" problem (every model needed its own connection to every tool). | Fast and efficient. Developers build a single MCP server for a tool, and any MCP-compatible AI application can use it, turning M × N integrations into roughly M + N components. |
| Security & Control | Fragmented and complex. Security and permissions had to be handled separately for each integration, with the risk of exposing too much data through a poorly configured API. | More structured and granular. Each client keeps an isolated one-to-one connection with a single server, and each server exposes only the tools and data it chooses to, so access can be scoped to what a task needs. Actual security still depends on how hosts and servers implement authentication and user consent. |
| Ecosystem | Closed and siloed. Integrations were often proprietary or required complex middleware, hindering collaboration and the adoption of open-source tools. | Open and collaborative. As an open standard, MCP fosters an ecosystem where developers can easily share servers and clients, promoting interoperability and reducing vendor lock-in. |
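
The "M × N" problem is simple arithmetic. The sketch below makes two simplifying assumptions. First, each point-to-point integration counts as one unit of work. Second, with MCP each AI application adds MCP client support once and each system gets one MCP server, so the work grows as M + N instead of M × N. The application and system counts are illustrative, not figures from a real deployment.

```python
def point_to_point(models: int, tools: int) -> int:
    # Every AI application needs its own custom integration with every system.
    return models * tools


def with_mcp(models: int, tools: int) -> int:
    # Each application implements an MCP client once; each system gets one MCP server.
    return models + tools


for m, n in [(1, 12), (3, 12), (5, 40)]:
    print(f"{m} AI apps x {n} systems: {point_to_point(m, n)} custom integrations "
          f"vs {with_mcp(m, n)} MCP components")
```

With a single AI application, the two approaches cost about the same (12 versus 13). The savings appear once a second or third AI tool needs access to the same systems: 36 versus 15 for three applications and twelve systems.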

This shift from a conversational tool to an AI agent is the biggest impact of the Model Context Protocol. A traditional LLM is a conversationalist; an AI agent is a doer. While a standard LLM can tell you what to do, an MCP-enabled agent can actually do it.

Think about a simple business workflow: a potential customer fills out a form on your website. Without MCP, an AI might be able to draft a follow-up email, but you would still need a human to manually copy the customer’s information into your CRM, assign a sales rep, and send the email. With an MCP-enabled agent, a single command can trigger a chain of actions:

1. Create a new lead record in your CRM with the customer’s details.
2. Assign the lead to a sales rep.
3. Draft and send a personalized follow-up email.

The entire process is automated end to end, without any manual hand-offs. This isn't just a hypothetical scenario. Businesses are already using MCP to streamline everything from IT ticket management and employee onboarding to real-time inventory updates and personalized marketing campaigns.

One of the most persistent problems with LLMs is "hallucination," where the model generates plausible-sounding but factually incorrect information. Hallucinations often happen because the AI is relying on its internal, static training data, which may be outdated or simply wrong. A study by Vectara found that popular LLMs hallucinated at least 3% of the time, and some models far more often, even when simply summarizing a document they were given. That might not sound like a lot, but at business scale it can lead to costly errors.

MCP directly tackles this problem by securely connecting LLMs to internal, proprietary data sources. Instead of the AI pulling an answer from its generic training data, it can use an MCP server to query your company’s real-time, authoritative data.

For example, if a customer support bot needs to know the status of an order, it doesn't need to guess. It can use an MCP server to connect to your live database, find the customer's order, and get the exact, current status.

This not only dramatically reduces hallucinations but also makes the AI's output far more factual, reliable, and trustworthy.
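
As a rough illustration of the order-status example, the handler below shows what a hypothetical `get_order_status` tool on an MCP server might do. It queries live data and returns either the exact status or an explicit error, so the model never has to guess. An in-memory SQLite table stands in for your order database, and its layout is assumed. The returned dictionary follows the general shape of an MCP tool result: a `content` list plus an `isError` flag.

```python
import sqlite3

# In-memory stand-in for the live order database (hypothetical schema).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, customer TEXT, status TEXT)")
db.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("A-1001", "dana@example.com", "shipped"), ("A-1002", "lee@example.com", "processing")],
)


def get_order_status(order_id: str) -> dict:
    """Hypothetical MCP tool handler: answer only from the database."""
    row = db.execute("SELECT status FROM orders WHERE order_id = ?", (order_id,)).fetchone()
    if row is None:
        # Report the miss explicitly instead of inventing a plausible status.
        return {"content": [{"type": "text", "text": f"No order found with ID {order_id}."}],
                "isError": True}
    return {"content": [{"type": "text", "text": f"Order {order_id} is {row[0]}."}],
            "isError": False}


print(get_order_status("A-1001")["content"][0]["text"])  # Order A-1001 is shipped.
print(get_order_status("Z-9999")["isError"])  # True
```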

You might be thinking, "This sounds a lot like RAG." And while both Retrieval-Augmented Generation (RAG) and MCP are designed to ground AI models in external data, they serve different, complementary purposes.

Think of it this way: RAG helps the AI know things, while MCP helps the AI do things. In a real-world scenario, you would likely use both. An AI agent might use a RAG system to find the right information in a knowledge base and then use an MCP server to take a specific action based on that information.
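
The "know, then do" split fits in a few lines. The retrieval step here is a deliberately naive keyword-overlap search over a hypothetical two-document knowledge base; a real RAG system would typically use embeddings and a vector index. The `issue_refund` action is also hypothetical and stands in for a tool that an MCP server would expose. The 30-day policy is made up for the example.

```python
import re
from datetime import date

# Hypothetical knowledge base that the retrieval (RAG) step searches.
KNOWLEDGE_BASE = [
    "Refund policy: refunds are allowed within 30 days of purchase.",
    "Shipping policy: standard shipping takes 3 to 5 business days.",
]


def words(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str) -> str:
    """Knowing: naive keyword-overlap retrieval (a stand-in for vector search)."""
    return max(KNOWLEDGE_BASE, key=lambda doc: len(words(question) & words(doc)))


def issue_refund(order_id: str) -> str:
    """Doing: hypothetical action a payments MCP server would expose."""
    return f"Refund issued for {order_id}"


def answer(question: str, order_id: str, purchased: date, today: date) -> str:
    policy = retrieve(question)
    match = re.search(r"within (\d+) days", policy)
    if match is None:
        return f"No refund rule found in: {policy}"
    # Act only if the retrieved policy allows it.
    if (today - purchased).days <= int(match.group(1)):
        return issue_refund(order_id)
    return f"Not eligible. {policy}"


print(answer("What is the refund policy for my purchase?", "A-1001",
             purchased=date(2024, 5, 1), today=date(2024, 5, 20)))
# Refund issued for A-1001
```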

The Model Context Protocol (MCP) creates an ecosystem for AI that's far more efficient and flexible than traditional integrations. For a business leader, this translates into a few key advantages:

Instead of developing a new, custom integration for every AI tool you want to use, you only need to create an MCP server for your internal systems. Think of a server as a secure, standardized adapter for your company's data and tools. Once you have a server for your CRM, for example, any MCP-compatible AI assistant, whether it's an internal tool or a third-party application, can securely connect to it. This cuts down on development time and cost, making it much easier to scale AI across your organization.
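
Here is a minimal sketch of what "one adapter per system" means in practice. A hypothetical CRM server advertises a single tool and answers `tools/list` and `tools/call` requests. Like MCP tool listings, the tool carries a `name`, a `description`, and a JSON Schema `inputSchema`. The `get_contact` tool, the contact data, and the error messages are assumptions. A production server would normally be built with one of the official MCP SDKs, which also handle the transport and the initialization handshake.

```python
import json

# Hypothetical CRM data; a real adapter would call your CRM's own API.
CONTACTS = {"dana@example.com": {"name": "Dana", "stage": "qualified"}}

# Advertised in response to tools/list: a name, a description, and an argument schema.
TOOLS = [{
    "name": "get_contact",
    "description": "Look up a CRM contact by email address.",
    "inputSchema": {"type": "object",
                    "properties": {"email": {"type": "string"}},
                    "required": ["email"]},
}]


def get_contact(email: str) -> str:
    contact = CONTACTS.get(email)
    return json.dumps(contact) if contact else f"No contact for {email}"


HANDLERS = {"get_contact": get_contact}


def error(req_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def text_result(text: str, is_error: bool = False) -> dict:
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle_message(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request the same way for every connecting client."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result = {"tools": TOOLS}
    elif req["method"] == "tools/call":
        name = req["params"]["name"]
        args = req["params"].get("arguments", {})
        if name not in HANDLERS:
            return error(req["id"], -32602, f"Unknown tool: {name}")
        required = next(t for t in TOOLS if t["name"] == name)["inputSchema"]["required"]
        missing = [field for field in required if field not in args]
        result = (text_result(f"Missing arguments: {missing}", is_error=True) if missing
                  else text_result(HANDLERS[name](**args)))
    else:
        return error(req["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# An internal assistant and a third-party app would send exactly this same request.
request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                      "params": {"name": "get_contact", "arguments": {"email": "dana@example.com"}}})
print(json.loads(handle_message(request))["result"]["content"][0]["text"])
# {"name": "Dana", "stage": "qualified"}
```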

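Before MCP, AI was mostly a passive tool, like a search engine or a chatbot that could only answer questions. With MCP, the AI can become an active agent within your business. It's not just "reading" information; it's "doing" things. For a business owner, this means your AI can act on your systems directly, for example by updating CRM records, managing IT tickets, or adjusting inventory levels, rather than only answering questions about them.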

This shift moves AI from being a helpful assistant to a core operational tool that can handle complex business tasks on its own.

MCP isn't owned by a single company; it's an open standard. This is a crucial distinction from closed, proprietary systems. An open standard means that as the MCP ecosystem grows, your business benefits. Developers and vendors are building new MCP servers for popular tools like Google Drive, GitHub, and Salesforce, which means you have a growing library of pre-built integrations you can use right out of the box.

This open ecosystem fosters innovation, reduces vendor lock-in, and helps future-proof your AI investments.

The next generation of enterprise AI won't just talk to you; it will work alongside you. It will be an autonomous doer, a proactive agent that can execute complex, multi-step tasks across your entire software ecosystem. This future isn't a decade away; it's being built today, and Model Context Protocol (MCP) is the blueprint.

The businesses that will win are the ones who move beyond the hype and start building practical, helpful AI solutions that connect to their most valuable assets: their own data and tools. The biggest challenge isn't the AI itself, but the plumbing that connects it to your business.

If you're a business leader or a marketer looking to unlock the full potential of generative AI, the time to move is now. We specialize in building custom generative AI solutions that leverage the power of protocols like MCP to integrate seamlessly with your business. Stop settling for a smart AI that can't do anything and start building a solution that can take action.

Contact us today to explore how we can help you build an intelligent, integrated AI that works for you.
